Llama3 Chat Template - The chat template, bos_token, and eos_token for Llama 3 Instruct are defined in tokenizer_config.json. A prompt can optionally contain a single system message, or multiple alternating user and assistant messages. On generating the eos_token, Llama 3 will cease to generate more tokens. In this tutorial, we'll cover what you need to know to get started quickly.
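As a rough illustration of how such a chat template turns a list of messages into one prompt string, here is a minimal sketch in plain Python. The special-token strings follow the published Llama 3 Instruct prompt format, but they are assumptions here and should be checked against the model's own tokenizer_config.json. The function name `build_llama3_prompt` is hypothetical and is not part of any library.

```python
# Special-token strings as published for Llama 3 Instruct (assumed here;
# verify them against your model's tokenizer_config.json).
BOS = "<|begin_of_text|>"
START_HEADER = "<|start_header_id|>"
END_HEADER = "<|end_header_id|>"
EOT = "<|eot_id|>"  # marks the end of one message in a turn


def build_llama3_prompt(messages):
    """Render a list of {"role", "content"} dicts into one prompt string.

    Hypothetical helper for illustration only.
    """
    parts = [BOS]
    for message in messages:
        # Each message is a role header, a blank line, the content, then EOT.
        parts.append(f"{START_HEADER}{message['role']}{END_HEADER}\n\n")
        parts.append(f"{message['content'].strip()}{EOT}")
    # Finish with an open assistant header so the model writes the next turn.
    parts.append(f"{START_HEADER}assistant{END_HEADER}\n\n")
    return "".join(parts)


messages = [
    {"role": "system", "content": "You are a helpful assistant."},
    {"role": "user", "content": "What is a chat template?"},
]
prompt = build_llama3_prompt(messages)
print(prompt)
```

In practice, the Hugging Face `transformers` tokenizer method `apply_chat_template(messages, tokenize=False, add_generation_prompt=True)` renders the template stored in tokenizer_config.json, so a hand-written builder like this is mainly useful for understanding or debugging the format.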

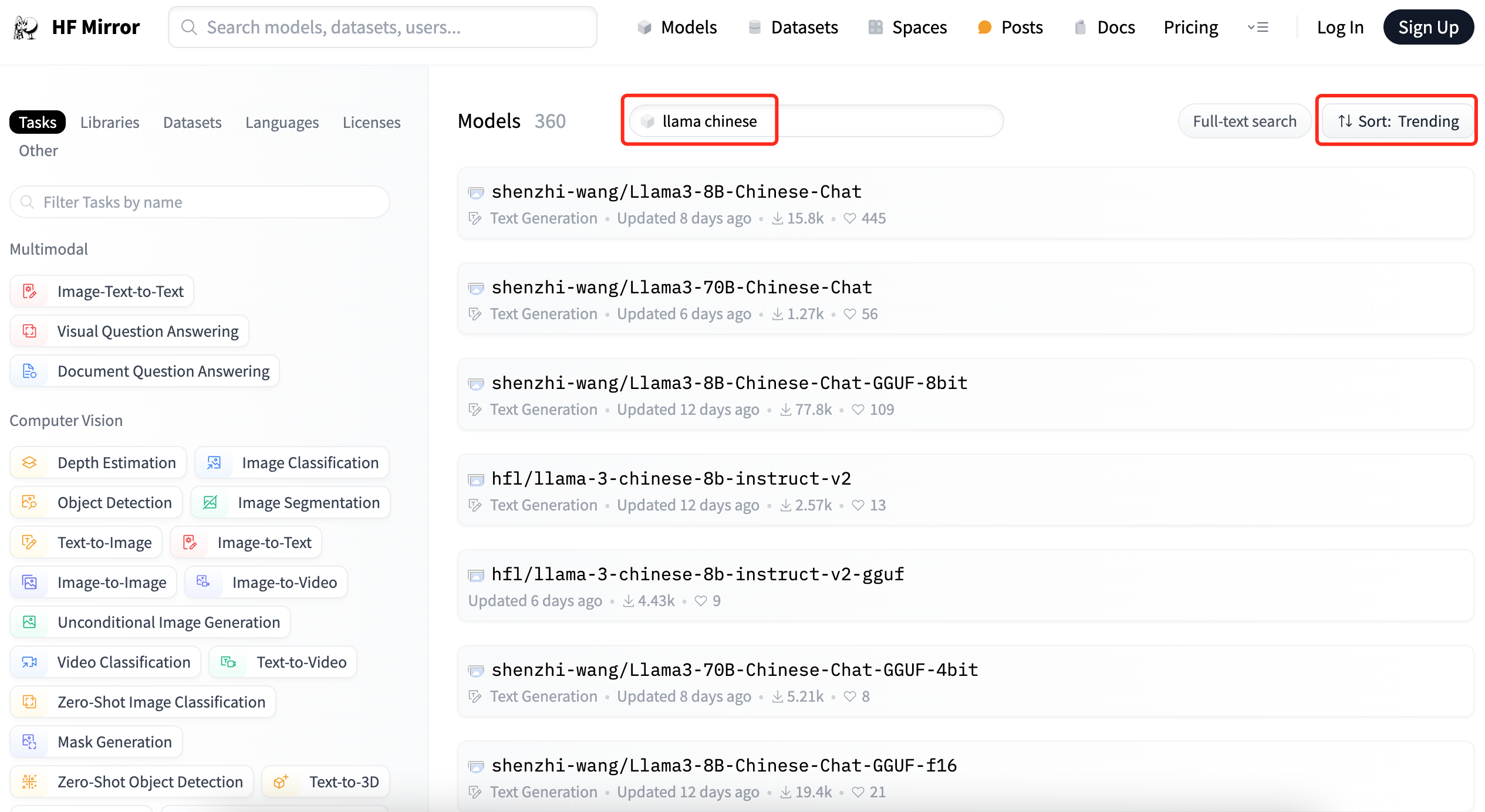

Building a Chinese-Language Chatbot (Llama3ChineseChat) Based on Llama 3 老牛啊 博客园

Unleashing the Power of Llama 3 A Comprehensive Guide Fusion Chat

wangrice/ft_llama_chat_template · Hugging Face

antareepdey/Medical_chat_Llamachattemplate · Datasets at Hugging Face

Llama3 Full Rag Api With Ollama Langchain And Chromadb With Flask Api

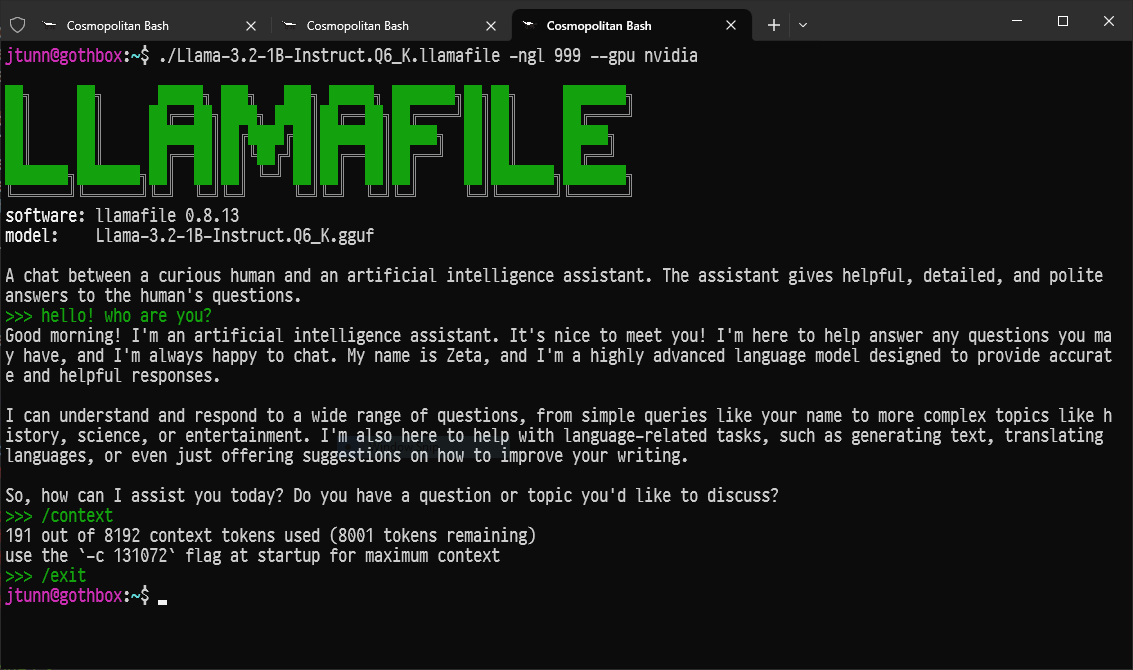

Mozilla/Llama3.21BInstructllamafile · Hugging Face

nvidia/Llama3ChatQA1.58B · Chat template

shenzhiwang/Llama38BChineseChat · What the template is formatted

vllm/examples/tool_chat_template_llama3.2_json.jinja at main · vllm

Building a Chinese-Language Chatbot (Llama3ChineseChat) Based on Llama 3_llama38bchinesechat CSDN Blog
